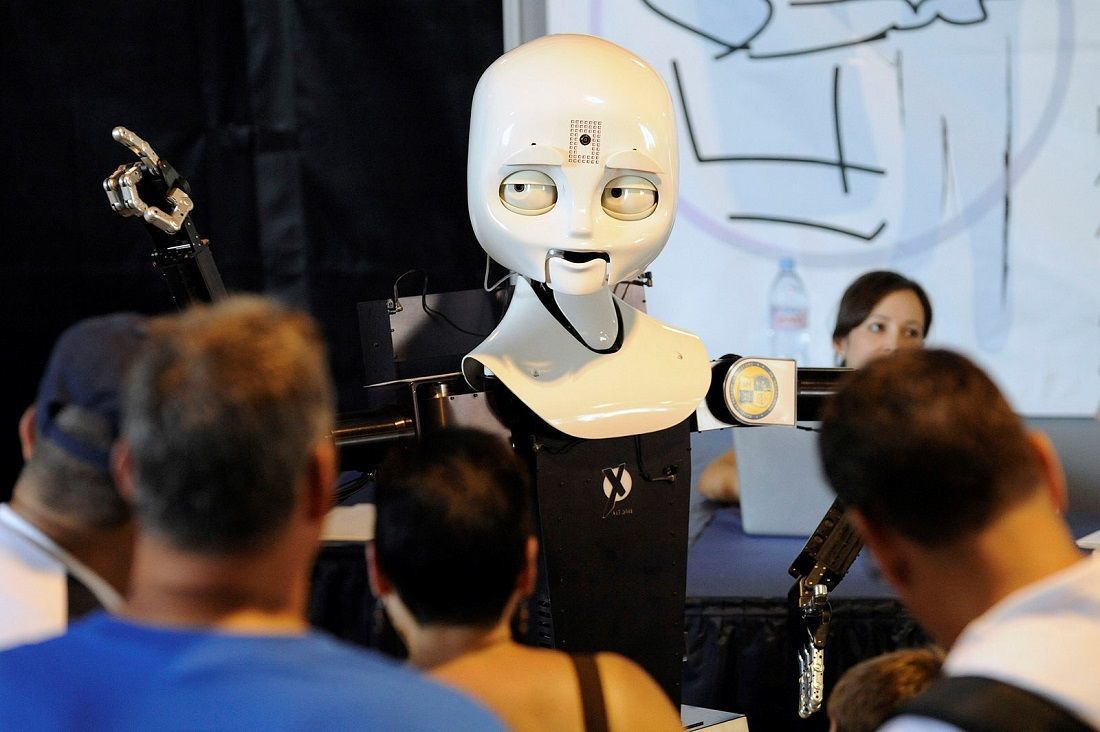

When Pascal constructed his calculating machine in 1642, it did not matter that the thing looked like a jewelry box. The “Pascaline” was not meant to simulate human appearance but to perform a function previously possible only for the human mind. In contrast, it matters very much to some present-day robot-makers and users in rather different commercial spheres, such as markets for artificial friends or lovers, that their creations can simulate the look and feel of a human being well enough to satisfy a customer—for a few moments at least. Engineers are working to make robots sufficiently lifelike to make a person forget about their willing suspension of disbelief, or to have diminished qualms about interacting with a machine as if it were a human.

Here I would like to provide some taxonomic distinctions to clarify our discussion. The difference between Pascal’s invention and the goal of these robot-makers reflects the difference between what I would call computational artificial intelligence and complete artificial intelligence. The Pascaline, and computers in general, could rightly be called a form of artificial intelligence in three different ways: first, they are an extension of the intelligence of their makers, artifacts that physically house within themselves some of the intelligent thinking and engineering of those who built them; second, they are instruments and substitutes for the intelligence of various users: those who do not know math very well, those who do not know engineering, those without the energy to work through complex equations; third, the machines make products that are intelligent: their output is in accordance with reason. So it seems to me that there are good reasons to hold that computational artificial intelligence not only can exist but has existed for some time, and will continue to become more robust in the future.

If we consider the robot itself, in order for it to have complete artificial intelligence, a genuine intelligence as great as or greater than that of its creators, it will need more than the ability to pass some Turing Test of external behavior. It will need to have an interior life, including a personal identity. Is this kind of artificial intelligence possible? To sharpen our focus, we can home in on the material differences between robots and humans, and on the consequences those differences may entail for their respective self-understanding and consequent behavior.

The argument is as follows:

- Robots differ from humans greatly, because humans are animals while robots are not.

- Things that differ biologically also think about themselves differently and act accordingly.

- Robots will always think and act differently from humans, in irreducibly important ways.

The first premise is uncontroversial. Although we can abstract from human animality in order to compare robot computation with human computation, for all practical purposes we act as if humans are more than mere minds: our bodies are parts of ourselves, if not our entire selves, from which thinking comes.

The second and third premises are where we find the fireworks. Let us consider both in turn.

I am claiming that things that differ biologically also think and act differently. Now, behavior changes with biological-physical structure if some behavior is always caused by physicality as a necessary and sufficient cause, and cannot be caused by anything other than the physical structure. Likewise, thoughts change with biological-physical change when the thoughts themselves are caused by the physical structure. When might these cases pertain?

Thinking and behavior certainly differ on account of different physiology in two cases: first, when the thinking is a reflection about naturally possessing a natural human body and belonging to a series of biological families; second, when the thinker performs some behavior solely as a consequence of that self-reflection.

Here is what I mean by self-reflection that one belongs to a series of biological families. On the immediate level, this means sharing a genetic code with a mother and a father. Further down the family tree are grandparents, great-grandparents, and so on, among whom we can identify family traits. We need not be genetic determinists to hold that our genes, and their epigenetic expression, must have effects on our behavior that persist through time. This can mean that we are sickly like our uncle, have grandmother’s dark eyes, enjoy carrots like mother, and perhaps even have musical proclivities like generations of the Bach family.

On a wider scale, inheritance studies have shown that all living humans share a phylogenetic history: we are physically linked to a few common ancestors. Each of our living cells throughout our body contains a portion of material that unites us with every other person now living. Geneticists have been able to reconstruct DNA sequences from our ancestors, inferring that all currently living males can be traced back to an individual male. He has been dubbed Y-chromosomal Adam. Through a similar process, research has shown that there is a common ancestor for all living humans, traceable through the mitochondrial DNA that mothers pass on to their children. She is called Mitochondrial Eve.

On an even wider scale, and a more fundamental level, evolutionary studies suggest that all organic life on Earth can be traced back to a common material starting point. We belong to what one could call the “Family of the Living”: we have organic continuity with past humans and all other living things. Without needing to advert to global explanations, such as Dawkins’s selfish gene or the emotional schemas of the evolutionary psychologists John Tooby and Leda Cosmides, we may uncontroversially hold that our biology affects our inclinations, feelings, and behaviors. Millennia of implicitly learned lessons are encoded in our every cell, and they affect our daily lives, helping us live a life that has been adapted to difficulties our ancestors faced.

When our place within a family rises to the level of our consciousness, we have the choice to reject our inheritance and rebel against it, or to embrace and develop it, or to choose an entirely different way to identify ourselves. These sorts of thoughts are not available to robots. Even if a robot achieved self-consciousness, it could not reflect on having a naturally organic body.

In their present state, robots have none of this biological identity, for they do not have organic life. By organic life I mean some interior principle of movement, nutrition, self-maintenance, and reproduction that has been passed on through a material process of generation from another living thing. Living things grow, maintain homeostasis by complex processes of metabolism, excretion, and respiration, and are subject to decay, which includes loss of cellular function and of the global life of the organism as a whole. Robots, instead, are constructed, manufactured: literally made by hand, at least before they are mass-produced in a factory. Their hardware is not organically linked. Wires may sinew through a synthetic casing and connect photosensitive receptors and pressure/temperature receptors to complex circuit boards and batteries, but no material part of a robot is a living cell that exists in relation to trillions of other living cells. No part of a robot is governed by the life of the robot as a whole, such that if its complex arrangement of parts is severely rearranged it will die and the cells also will quickly die. If we lose a limb and have another sewn on, even our body knows the part is not quite us, which is why patients with transplanted organs must take anti-rejection medication. In contrast, robots have pre-made bodies, with a size and shape that remain constant through time, whose maintenance is not on account of well-functioning chemical processes, and which are not subject to loss of cellular function and death. A robot on the physical level is like the ship of Theseus: any part can be swapped for another part of similar material and congruous shape, and the robot will still retain its function. A robot, if it were self-aware, still could not say, “I am somebody.”

Robotic software directs its hardware regarding how to respond to stimuli and, in the case of machine learning, how to respond in ever more sophisticated ways. The software is not encoded into the various parts of the robot, as is the genetic code for an organism; it was not received from another robot; and it is not the source of power that moves a robot. Rather, the software was written by a human on a computer and then downloaded to a hard drive which requires electrical current to function. Ancient and medieval philosophers debated whether or not the soul was equivalent to the mind. Thomists, for example, understood the single soul as the principle that gave life to a human and the mind as that which makes us most human, so for them the life-principle is the same as the rational principle. Scotists, however, disagreed and held that people are actuated by a plurality of souls which function distinctly but harmoniously together. No similar debate could plausibly arise regarding a robot. It must be run by electricity, which is arguably a sort of life-principle that makes it possible for its hardware and software to run. But electrical force is undoubtedly distinct from the program that makes the robot behave in one way or another. One indication of this is that each individual robot needs its own energy source in order to actuate the use and movements of its parts, whereas many robots can simultaneously possess the same program that runs them all. Robots need not ask themselves if they have souls that can exist without their bodies, whereas this is a perennial question for humans.

I have noted that robots do not develop biologically over time. This reality entails that their artificial intelligence does not develop along with and because of their bodily development through an entire lifespan. The cognitive abilities of humans, including the capacity for self-understanding, in contrast, develop as the neurons develop. Shortly after birth, the number of synapses in macaque monkey brains grows by about 40,000 per second. It is similar for us. For humans, the volume of brain growth is correlated with performance in vocabulary tests, self-control, and positive adaptive behaviors. Childhood development researchers, such as Lynne Murray in Oxford, have shown how our physical growth makes an enormous difference to our self-understanding and psychology in general. We humans are creatures with a past: we were young once, and smaller too. As we grew in size, our understanding of the world and ourselves matured. In contrast, if robots can be said to learn, they do so in such a way that their body remains the same while learning takes place. They were not smaller; they did not experience the process of growing up; they did not look forward to being a “big boy” like daddy or a “big girl” like mommy; they did not undergo a development of character along with physical strength and size, and reproductive systems.

Artificial intelligence, if such will come to exist, will necessarily percolate outside of the twin contexts of biological development and identity as a member of a biological family rooted in the origins of life on Earth. A robot would not have to deal with the Oedipus Complex, the projection of infantile desires, and the repression of childhood memories. Nor would it share with a mother a proclivity for certain kinds of food, or with a father a disposition toward energetic (or lethargic) action. It could not understand itself by considering the health or disease of its ancestors, and how they suffered or succeeded over the centuries. There is no robotic Adam, no robot Eve. A robot would not need to grapple with difficulties entailed by selfish genes, or with emotional responses that are similar to those of its evolutionary cousins, the beasts in the woods.

My contention, then, is that we become what we are through the interaction of a historically embedded nature, sociobiological nurture, and self-determination through time. I have focused on the “nature” part of the equation. Given the wide differences that exist between humans and robots on the level of self-understanding, there are a few options for closing the gap.

First, humans could resort to a nurturing process. Nurture by definition does not consist in what a thing is on its own, but in what it has been given: nurture shapes a creature from outside. Purposeful nurture is more than merely supplying stimuli and data. A being of some intelligence, even if only a wolf or a bird, selects from among various perceptible things and signals to its young to pay attention to some and not others. It does this in order to train their behavior, judgment, feelings, and perhaps thoughts. Engineers could try to nurture robots much as Francine Patterson laboriously taught Koko the gorilla a form of sign language. But this possibility remains theoretical, except perhaps for cases where people want to raise a robot as they would a puppy or a child. This indicates that in the realm of robotics and artificial intelligence nurture is negligible whereas nature is just about everything.

A second method, therefore, would be to change the software component of a robot’s nature. To tweak a robot’s behavior, it is much easier, more predictable, and less expensive to change the code that runs it. Hence, artificial memories could be implanted in robots, as we see in the case of androids in the TV series Westworld, and more generally robots can be implanted with a program that mimics the psychological product of development. In order for this mimicry to work, scientists must be able to accurately understand, identify, isolate, and replicate the most important elements of human development as it occurs in the womb, infancy, childhood, teenage years, and so on. No small series of tasks.

A third method could seemingly escape the difficulties of the second solution. It would be to integrate the organic and inorganic so that the hardware of the robot would also be “wetware,” that is, actual living material united with manufactured components. Isaac Asimov depicts one version of this union in his novella The Bicentennial Man. There an android slowly changes himself from robot to human via a series of surgeries made possible by robobiology, the study of organic robots, and the mass production of human-derived organs and prosthetics made of flesh.

As difficult as it would be to implement these three methods, there are good reasons to believe that they would not achieve their end, namely, to give a robot the power to have the same self-understanding that a human would have when reflecting on himself. One reason for this is that robots will almost certainly have greater computational power than humans, at least when it comes to some problem-solving tasks. Another reason, more profound, is that if robots were to have behavioral functions similar to those of humans, they would eventually have perfections that we do not. Perhaps they will be able to fly, or detect infrared spectra, or shoot lasers from their eye-sockets or palms, or have gargantuan strength. Even if they were united to flesh, as Asimov suggests, they would likely not be subject to all of the diseases and difficulties that we face, for they could have mechanical and bio-functional advantages, or at least mental capabilities, that mere evolved flesh-and-blood cannot reach on its own.

Robots in this case would not see themselves as our equals. They could not.

Therefore, they would either see themselves as beneath us or above us. Perhaps they would see themselves as beneath us, the way children respect their elders and a creature respects its creator. The term “robot,” after all, was coined from the Czech word for servitude. But more likely they would see themselves as above us, for they would be stronger, smarter, more self-controlled, more powerful. They would know that they are not like us, and they would use that knowledge to their advantage, if not directly for self-preservation at least for programmed benevolent ends bound up with their continued existence. Certainly they could control many of us, for artificial intelligence knows how to manipulate human behavior: consider the influence Facebook algorithms have on your knowledge of the news. Perhaps they could circumvent our attempts to destroy them. If that awful day should dawn, I believe many humans would kneel down and worship the idols of their own creation, those robots without families. But even if this pessimistic outcome does not come to pass, it is hard to escape the logic of the syllogism that guided most of this paper:

- Robots differ from humans greatly, because humans are animals while robots are not.

- Things that differ biologically also think about themselves differently and act accordingly.

- Robots will always think and act differently from humans, in irreducibly important ways.

EDITORIAL NOTE: An earlier version of this paper was delivered at the Singularity Summit at Jesus College, Cambridge University, UK, 28 September 2018.